
From Projects to Products

Why AI Demands a New Kind of Leader

The Cost of Waiting

The world changed yesterday. While executives debate AI strategy, their competitors are already embedding AI into the core operating system of their business, reorganizing around product operating models, and elevating AI-literate leaders to central roles. These organizations won't just deploy better models—they will learn faster, adapt workflows more quickly, and align technology and strategy more tightly than those that remain trapped in project thinking.

The question for executives now is not whether to adopt AI tools, but whether their operating model and leadership bench are designed for an AI-first reality. That requires cross-functional, outcome-driven teams and, critically, leaders who are simultaneously deep in the business, fluent in AI, and capable of bridging everything in between.

This white paper describes that model, defines the bridge leader archetype, and offers a pragmatic roadmap grounded in both industry research and real-world implementation. Because theory without practice is speculation—and practice without theory is luck.

Why the Traditional Model Fails for AI

Before we can talk about what works, we need to understand why the traditional approach to technology projects breaks down when AI enters the picture. Organizations have long relied on a familiar playbook: define requirements, build to spec, deploy, and move on. That model made sense for rule-based systems. But AI is fundamentally different, and forcing it through the old process is a major reason so many AI initiatives stall in pilot purgatory.

The Project-Based Paradigm

For decades, enterprise technology has been delivered through a familiar sequence: subject matter experts define requirements, business analysts translate them into specifications, and IT or vendor teams build and deploy systems. This project-based model assumes three things that made sense for traditional software:

- Stable requirements: The business need is well-understood at the outset and doesn't change significantly during development.

- Deterministic behavior: Once the code is written and tested, the system behaves predictably and consistently.

- A clear finish line: There's a go-live date, after which the system enters maintenance mode with infrequent updates.

This model worked reasonably well for enterprise resource planning systems, customer relationship management platforms, and claims administration systems. These are complex, but they're fundamentally rule-based. The logic is explicit, coded once, and stable.

Why AI Breaks the Assumptions

AI systems break all three assumptions. They are probabilistic, data-dependent, and deeply intertwined with evolving business context, regulations, and user behavior.

- Requirements are never stable: An AI system that triages claims or scores underwriting risk must adapt as claim patterns shift, fraud tactics evolve, or regulatory standards change. What worked last quarter may not work this quarter.

- Behavior is probabilistic, not deterministic: A traditional system routes a claim to the right adjuster 100% of the time if the rules are correct. An AI system might predict the right adjuster with, say, 87% accuracy, and that accuracy depends on the quality and recency of the training data.

- There is no finish line: AI systems require continuous monitoring, retraining, and refinement. Performance degrades over time as the world changes. New edge cases emerge. User expectations evolve. The system is never "done"—it's a living product that demands ongoing care.
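To make the contrast concrete, here is a minimal Python sketch. It is illustrative only: the `Claim` fields, the routing rule, the 0.85 threshold, and the quarterly accuracy figures are hypothetical assumptions, not taken from any real system. The rule-based function returns the same answer for the same input every time. The AI side is represented by how its accuracy has to be re-measured period after period, because performance can degrade as the world changes.

```python
from dataclasses import dataclass


@dataclass
class Claim:
    claim_type: str  # hypothetical field, e.g. "auto" or "property"
    amount: float    # claimed amount in dollars


def route_by_rule(claim: Claim) -> str:
    """Rule-based routing: identical inputs always produce identical outputs."""
    if claim.claim_type == "auto" and claim.amount < 10_000:
        return "fast_track_team"
    return "senior_adjuster"


def accuracy(predicted: list[str], actual: list[str]) -> float:
    """Share of a model's routing predictions that matched the adjuster actually needed."""
    if not actual or len(predicted) != len(actual):
        raise ValueError("predicted and actual must be non-empty and equal in length")
    matches = sum(p == a for p, a in zip(predicted, actual))
    return matches / len(actual)


def periods_below_threshold(history: dict[str, float], threshold: float) -> list[str]:
    """Monitoring periods whose measured accuracy fell below the agreed threshold."""
    return [period for period, acc in history.items() if acc < threshold]


if __name__ == "__main__":
    claim = Claim("auto", 4_500.0)
    # The rule never disagrees with itself, so there is nothing to re-measure.
    assert route_by_rule(claim) == route_by_rule(claim) == "fast_track_team"

    # Hypothetical quarterly accuracy for a routing model (illustrative figures only).
    history = {"Q1": 0.87, "Q2": 0.86, "Q3": 0.81, "Q4": 0.78}
    print(periods_below_threshold(history, threshold=0.85))  # ['Q3', 'Q4']
```

Note that the threshold is an explicit input. Deciding what level of accuracy is acceptable is a business judgment as much as a technical one, which is exactly why AI products need owners who understand both.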

"When AI is forced through waterfall-style handoffs, translation losses accumulate at every stage. Core questions about value, risk, and feasibility are answered too late or not at all."

The Pilot Purgatory Problem

Industry research on AI initiatives repeatedly highlights what's become known as "pilot purgatory." Organizations launch dozens of proofs of concept, often with impressive initial results. A fraud detection model achieves 92% accuracy in testing. A claims triage system reduces manual review time by 40% in a controlled pilot. An underwriting assistant generates policy summaries that impress executives in demos.

But few of these pilots reach scaled, stable production. They remain isolated experiments instead of integrated products with clear ownership and continuous care. Why? Because they're treated as IT projects with a beginning, middle, and end—when they actually require product thinking, cross-functional ownership, and continuous iteration.

Boston Consulting Group's 2025 analysis found that companies stuck in this pattern waste 60-70% of their AI investment on pilots that never scale. Meanwhile, companies that have redesigned their operating models around AI are achieving 3-5x faster time-to-value and 40-50% higher success rates.

The AI-First Operating Model

From Side Projects to Operating System

An AI-first operating model starts from a fundamentally different premise: AI is part of the enterprise's operating system, not a series of side projects. Rather than asking, "Where can we deploy a model?", leaders ask, "How should our claims flow, underwriting, customer service, or supply chain operate now that AI can augment or automate major decisions?"

In this view, AI capabilities—prediction, generation, classification, optimization—are reusable services that support end-to-end value streams such as fraud detection, loss-cost control, churn prevention, or cross-sell. The question isn't whether to use AI, but how to redesign the entire workflow to take advantage of what AI makes possible.

The Central Shift: From Projects to Products

Project Thinking

Organizes temporary teams around delivering a defined scope within a fixed timeline and budget. Success is measured by on-time, on-budget delivery of requirements. Once the project is complete, the team disbands and the deliverable enters maintenance mode.

Product Thinking

Organizes persistent teams around customer or business outcomes that are measured continuously over time. The team owns the problem space indefinitely and treats the solution as a living system that evolves based on data and feedback.

Cross-Functional Teams as the Default

To make product thinking real, AI-first organizations use cross-functional teams as the default unit of work. A typical high-performing AI product team includes domain experts, a product owner, data scientists and ML engineers, software and data engineers, risk and compliance partners, and UX practitioners.

As research on cross-functional AI teams emphasizes, the product manager serves as "the bridge between business users, data scientists, and data engineers." Value comes when these skills sit together in a single, stable team instead of across multiple departments separated by organizational walls and multi-month handoffs.

The Bridge Leader Archetype

Within an AI-first operating model, leadership capability becomes the real constraint. Traditional executives could succeed while remaining largely non-technical, relying on IT to translate strategy into systems. In an AI-first environment, that is no longer viable.

Commentators on AI and leadership increasingly argue that "AI-literate leaders will replace those who aren't." This doesn't mean every leader needs to become a data scientist. It means they must understand AI's capabilities, limitations, data needs, and risks well enough to make informed strategic and design decisions.

Domain & Business Depth

A track record of owning outcomes in a specific area and understanding incentives, constraints, and what "good" looks like

Product Mindset

Ability to design end-to-end workflows, frame problems, prioritize, experiment, and iterate continuously

AI & Data Fluency

Ability to discuss data sources, model behavior, and risk controls with technical teams and translate to business implications

The "Unicorn" Problem

This archetype is often described as a "unicorn"—someone who is simultaneously a domain expert, a product thinker, and technically fluent. And like any mythical creature, unicorns are hard to find in the wild.

No enterprise can depend solely on finding these rare individuals. But where bridge leaders are found or developed, they create outsized leverage. They compress decision cycles, reduce translation loss between business and tech, lend credibility across functions, and can make trade-offs in real time because they understand both the business impact and the technical feasibility.

From Theory to Practice

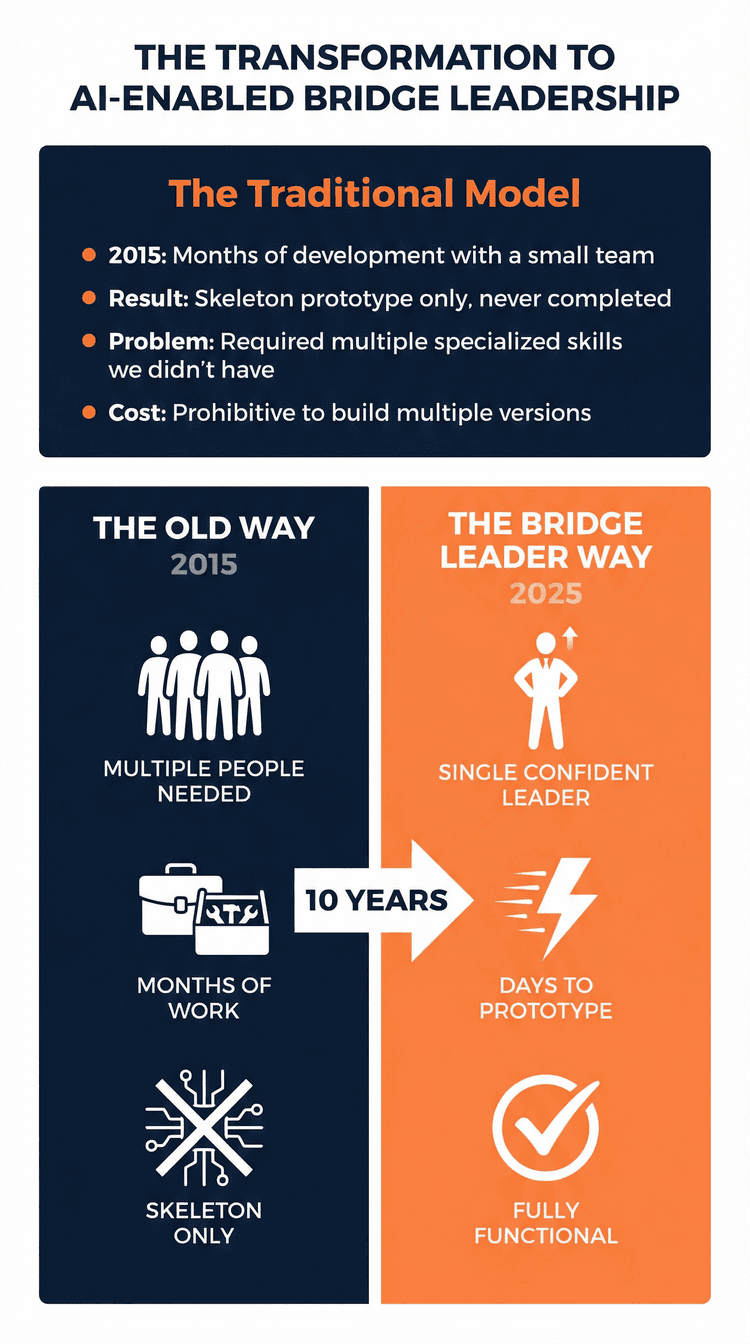

Ten years ago, I was one of those frustrated domain experts. After 25 years in insurance claims, starting as a litigator defending doctors against malpractice allegations and then moving into claims management and operations, I knew exactly where the pain points were. One of the biggest was the audit process.

The Old Way: Shoestringing a Dream

Every claims organization audits files to ensure quality, compliance, and consistency. But the process was stuck in spreadsheet hell. Audit owners would spend hours creating Excel templates, manually distributing assignments, and then days consolidating results and checking for errors.

I saw a clear need for a dedicated system that would automate the busywork, provide real-time visibility, and eliminate the errors that came from manual consolidation. I partnered with a developer, and we set out to build it. I was the SME; he was the builder. We were following the traditional model. We called it the Audit Portal, and the concept was well received within the industry.

After months of work, all we had was a skeleton. I couldn't even show a completed prototype to potential customers. My demos were a series of apologies: "Now, this isn't built yet, but the vision is that it would do this…"

The problem wasn't lack of effort. We were shoestringing it. We really needed a front-end developer, a back-end developer, a database expert, a UX designer, and a business analyst. But we had one developer who didn't have all those skills. The project eventually fizzled out. Another good idea lost in the gap between business need and technical execution.

The Transformation: Learning to Build

Fast forward ten years. When ChatGPT launched in late 2022, I, like many, started experimenting. At first, it was basic: drafting documents, summarizing articles. But about a year in, I started using it more seriously for my consulting practice.

Then came the "aha" moment. I realized I could ask an AI agent to build an HTML page to show someone what a possible business solution would look like. I could describe a user interface, and within 15 minutes, I had a working screen to look at. From there, my learning accelerated rapidly.

I went from multi-page HTML mockups that "would have been laughed at by true IT professionals" to working with Cursor, GitHub, Vercel, Supabase, Tailwind, Next.js, and more. The key difference was that I now had AI agents that knew all of these tools and could build for me. I wasn't trying to become a professional developer. I was trying to become fluent enough to direct AI agents effectively and understand what they were building.

"Last year, I spun up a working prototype in days. A tool with ten times the features of the one that took months to fail a decade ago now takes weeks to complete. I had become a bridge leader."

The Proof

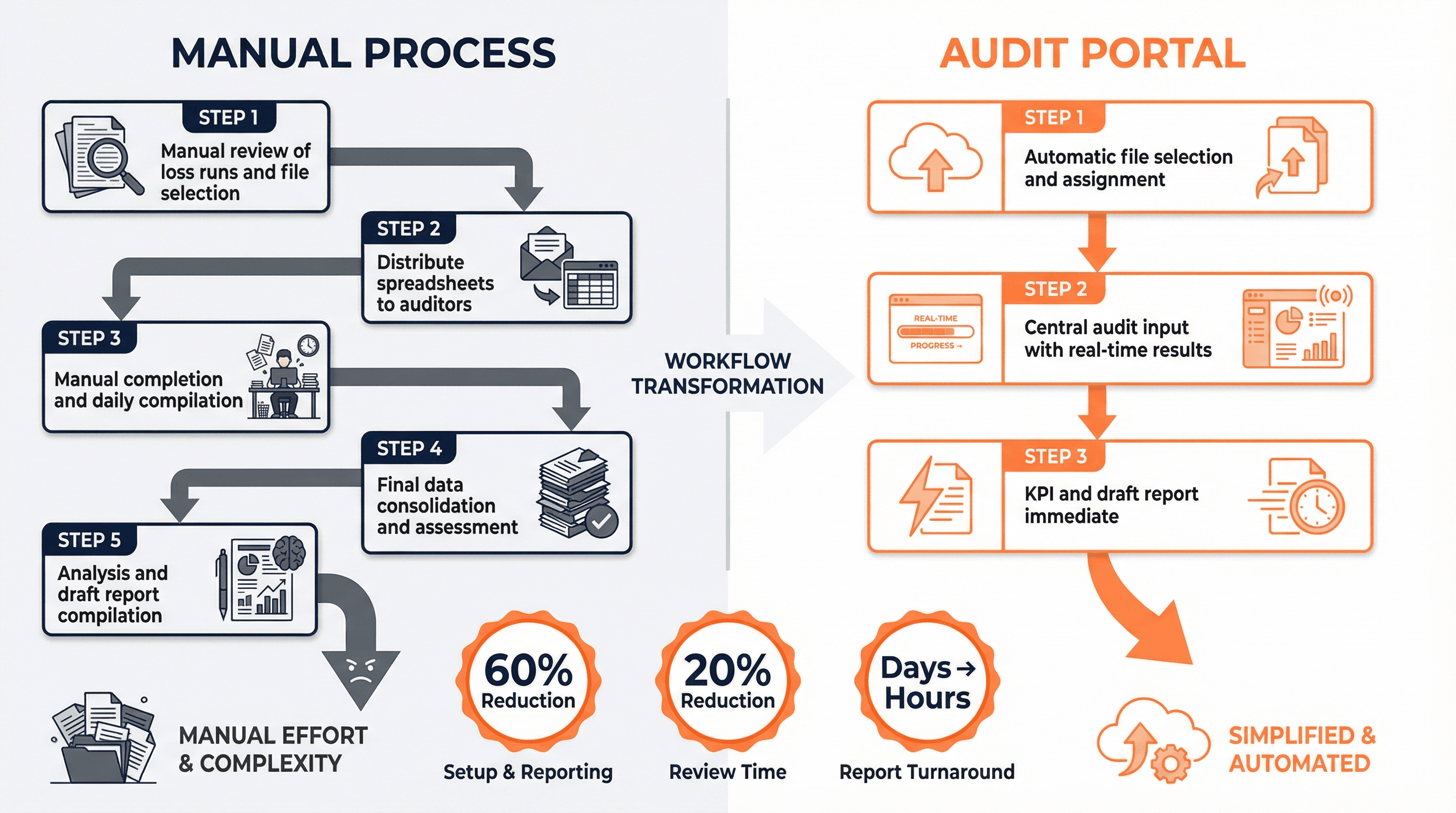

I apply this process in my consulting practice every day, with real results. The Audit Portal I tried to build the old way is finally not a theory but a reality. It's a functioning application that transforms a core claims process. Let me walk you through exactly what it does and why it matters.

The Transformation in Action

Look at the diagram below and you'll see why this matters. The left side shows what I was trying to escape ten years ago: five painful steps that turn a simple audit into a multi-day ordeal of spreadsheets, emails, manual data entry, and error-prone consolidation. Every claims organization knows this pain. The right side shows what I built: three clean steps where the system handles the busywork and gives you real-time visibility into what's actually happening. The numbers at the bottom aren't projections; they're what we're seeing in practice. Setup and reporting that used to take days now take hours. Review time drops by 20%. And the final report that used to take two days to compile? It's ready in minutes.

Transforming the audit workflow from manual complexity to streamlined efficiency


A Modern Claims File Review: Then and Now

To make this concrete, consider what this looks like for two real people in a typical claims audit scenario. David is the audit owner responsible for managing the entire process. Sarah is one of six examiners assigned to review claims. Their experience illustrates exactly why this transformation matters.

The "Before" State

An audit owner like David would spend his Monday morning building the audit infrastructure: creating master spreadsheets, manually distributing claim assignments via email to six reviewers, and setting up a separate tracker for QC items. By the time he finished, four to six hours had passed.

The examiners, like Sarah, would receive emails with Excel spreadsheets. They'd download separate spreadsheets with audit questions, open each claim PDF manually, and begin copying information. By 10 AM, Sarah had completed three claims, manually typing answers and calculating scores on scratch paper.

On Friday, David would spend six hours collecting everyone's individual spreadsheets, manually consolidating responses, checking for calculation errors, and building PowerPoint slides. The final report would take two days to compile and often contained reconciliation errors.

The "After" State

Today, David spends 45 minutes on Monday morning in the Audit Portal's Admin Console. He creates the new audit, selects the pre-configured question library, uploads the claim file, and assigns reviewers with a few clicks. The system automatically distributes assignments.

Sarah logs into the Portal and immediately sees her 25 assigned claims with priority rankings. She answers questions using radio buttons and dropdowns, and the system auto-calculates scores in real-time. By 10 AM, she's completed 12 claims. At day's end, she's audited 22 claims.

On Friday, David clicks "Generate Report" and the system produces a comprehensive executive report with findings, compliance breakdowns, and recommendations in minutes. The data is automatically accurate because it comes from a single source of truth.
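The "auto-calculated scores" and "single source of truth" ideas can be illustrated with a minimal Python sketch. This is not the Audit Portal's actual code: the yes/no/n/a answer scale, the question weights, and the names `Response`, `claim_score`, and `build_report` are hypothetical assumptions. The point is that when every reviewer's answers land in one shared list of records, per-claim scores and the overall figure are computed from the same data instead of being merged by hand from separate spreadsheets.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Response:
    claim_id: str
    reviewer: str     # kept so per-reviewer views can be derived from the same records
    question_id: str
    answer: str       # hypothetical answer scale: "yes", "no", or "n/a"


# Hypothetical question library: question id -> weight in the claim score.
QUESTION_WEIGHTS = {"Q1": 2.0, "Q2": 1.0, "Q3": 1.0}


def claim_score(responses: list[Response]) -> Optional[float]:
    """Earned weight divided by applicable weight; "n/a" answers are left out."""
    applicable = [r for r in responses if r.answer != "n/a"]
    possible = sum(QUESTION_WEIGHTS[r.question_id] for r in applicable)
    if possible == 0:
        return None  # nothing applicable to score on this claim
    earned = sum(QUESTION_WEIGHTS[r.question_id] for r in applicable if r.answer == "yes")
    return earned / possible


def build_report(responses: list[Response]) -> dict:
    """Consolidate every reviewer's responses into per-claim scores and an overall average."""
    by_claim: dict[str, list[Response]] = defaultdict(list)
    for r in responses:
        by_claim[r.claim_id].append(r)
    scores = {claim_id: claim_score(rs) for claim_id, rs in by_claim.items()}
    scored = [s for s in scores.values() if s is not None]
    overall = sum(scored) / len(scored) if scored else None
    return {"claims": scores, "overall": overall}


if __name__ == "__main__":
    # Illustrative answers from one reviewer on two claims.
    responses = [
        Response("C1", "Sarah", "Q1", "yes"),
        Response("C1", "Sarah", "Q2", "no"),
        Response("C1", "Sarah", "Q3", "yes"),
        Response("C2", "Sarah", "Q1", "yes"),
        Response("C2", "Sarah", "Q2", "yes"),
        Response("C2", "Sarah", "Q3", "yes"),
    ]
    print(build_report(responses))
    # {'claims': {'C1': 0.75, 'C2': 1.0}, 'overall': 0.875}
```

Because scores are derived from the stored answers rather than typed in, there is no separate consolidation step where reconciliation errors can creep in.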

This is what bridge leadership looks like in practice. I didn't just identify the problem; I built the solution. I didn't need a team of specialists or months of development. I needed domain expertise, product thinking, and enough AI fluency to direct the tools that could build what I envisioned.

When I show executives these results, they have their own "aha" moment. They're being slammed with pressure to "do something with AI" but have no idea what that means. This portal gives them a concrete answer with measurable ROI. It opens their eyes to possibilities they wouldn't have considered before, like doing more audits since they can now be completed more efficiently. The Audit Portal isn't just faster; it enables work that wasn't previously feasible.

What the Consulting Firms Miss

I've discussed this approach with a number of high-profile executives in the IT space, and they think this is where things are moving. They acknowledge that some of their biggest issues stem from a disconnect between the SME and IT, and this approach can solve that.

The consulting firms are right about the problem. Their research on AI-first operating models, product thinking, cross-functional teams, and bridge leaders is accurate and valuable. They've documented the patterns that successful organizations are following.

But here's where they fall short: they're too big, cost too much, and often lack deep subject matter expertise in their clients' day-to-day operations. They're not nimble enough, and they are built for large-scale change programs. I used to work at one, FTI Consulting, so I know this firsthand.

This is the model I'm now using in my consulting practice. I don't sell software. I partner with companies to act as their embedded bridge leader, working with their IT teams in their secure environment to build the solutions their business needs. I aim to be an extension of the IT department that eases its resource allocation challenges.

A Practical Roadmap

01 Diagnose Your Current Model

Before you can move to an AI-first operating model, you need to understand where you are today. Assess your organization along several key dimensions: Project vs. Product, Functional vs. Cross-Functional, Tech-Centric vs. Business-Led, and Leadership AI Literacy. Be honest about where you are: most organizations are still heavily project-based and functionally siloed.

02 Reorganize Around Priority Value Streams

Don't try to transform everything at once. Select two or three high-leverage areas where AI could have significant impact. For each area, stand up a cross-functional AI product team with a clear mandate and specific outcomes to improve. Give these teams budget and decision rights to iterate. Make these teams persistent: they don't disband after the first version is deployed.

03 Build Shared AI Platforms

Invest in shared platforms that provide reusable capabilities: data infrastructure with standardized pipelines, model development and deployment tools, monitoring and observability dashboards, and governance frameworks with built-in controls. The goal is to let teams focus on solving business problems rather than reinventing infrastructure.

04 Develop Bridge Leaders Intentionally

You need to develop bridge leaders who can operate effectively in this new model. This is scalable if companies foster this capability in their people. Identify high-potential candidates, provide targeted AI literacy programs, create immersive experiences through rotations on AI teams, and define career paths for roles like "AI Product Owner" where individuals are accountable for both business outcomes and AI-enabled solutions.

Ready to Build Your Bridge?

Let's discuss how you can develop bridge leaders in your organization and start delivering real AI results.

The World Changed Yesterday

The shift I'm describing is not speculative; it's already underway. Organizations are embedding AI into the "core operating system" of the business, reorganizing around product operating models, and elevating AI-literate leaders to central roles. These organizations will not just deploy better models—they will learn faster, adapt workflows more quickly, and align technology and strategy more tightly than those that remain trapped in project thinking.

I am proof that the bridge leader model works. The Audit Portal is proof that the results are not incremental, but transformative. And the response from executives who see it is proof that this approach resonates with real operational needs in a way that abstract AI strategies do not.

The question for executives is no longer whether to adopt AI, but whether you are developing the leaders who can wield it effectively. That requires moving from project thinking to product thinking, from functional silos to cross-functional teams, and from IT-led initiatives to business-led transformation.

"The consulting firms can help you understand the problem and design the future state. But they can't be your bridge leaders. Those have to come from within."

The world changed yesterday. The question is whether your operating model and leadership bench are designed for an AI-first reality. If not, the time to start building is now.

References

- Algoworks. (2025). How AI literacy empowers business leaders to drive growth.

- Boston Consulting Group. (2025). How companies can prepare for an AI-first future. Retrieved from https://www.bcg.com/publications/2025/how-companies-can-prepare-for-ai-first-future

- Chief AI Officer. (2025). AI won't replace leaders, but AI-literate leaders will replace those who aren't.

- Data Science PM. (2024). AI product owner. Retrieved from https://www.datascience-pm.com/ai-product-owner/

- Dialzara. (2026). Cross-functional teams for AI success: Guide.

- Energy Training. (2025). AI literacy for leaders – What every executive must understand now.

- European Business Review. (2025). AI literacy: A leadership imperative.

- Infosys. (2023). Tech navigator – The AI operating model. Retrieved from https://www.infosys.com/technavigator/2023/documents/ai-operating-model.pdf

- Meridian AI. (2025). The AI-first dilemma: Product or operations first?

- Mind the Product. (2025). Why AI development demands product operating models.

- Product School. (2024). AI product owner: The role you and businesses want in 2026. Retrieved from https://productschool.com/blog/artificial-intelligence/ai-product-owner

- Product School. (2024). Product operating models: How top companies work.

- SoftwareMind. (2025). Project vs product: Why companies are turning to a product-driven approach.

- ThinkIA. (2025). Enterprise AI strategy: A guide to the AI-first operating system.

- Tribe AI. (2025). AI product development vs software development: A strategic guide.